21 January 2021

Some apparent problems in a high-profile study of ultra-processed vs unprocessed diets

The study

The authors recruited 20 volunteers, 10 male and 10 female, and kept them under observation for 28 days in an in-patient environment at the NIH Clinical Center in Bethesda, Maryland. The data show that between one and four people were in the facility at any point between the first admission on April 17, 2018 and the last recorded data collection on November 19, 2018.

Participants spent 14 days on each of two diets, one described as "ultra-processed" and the other as "unprocessed". The diets were presented on a 7-day rotation, so each participant ate the same meal twice, 7 days apart. Although the purpose of the study was to examine the effect of an "ultra-processed" diet, and that term tends to be used in nutrition science with a specific meaning that is different from "processed" (it's complicated), I will use the terms "processed" and "unprocessed" to distinguish between the two, which I hope will avoid any confusion that might be caused by the fact that "ultra-processed" and "unprocessed" both start with the same letter. The participants were randomised to receive the processed diet first (N=10, 6 male, 4 female) or the unprocessed diet first (N=10, 4 male, 6 female); after 14 days on one diet they immediately switched to the other, as shown here.

The study code and data

The authors have made their data and SAS analysis code available in an OSF repository here. There are two datasets, named ADLDataSAScode and ADLDataSAScode1, each in its own ZIP file. The only difference between these seems to be that ADLDataSAScode1, which was uploaded on August 20, 2019 (three months after the article was first published online, which was on May 16, 2019), contains one extra data file, and the code has been extended with a few lines to produce a table from that file (more on this later). All of the analyses in this post refer to the ADLDataSAScode1 dataset.

The SAS code is not, as one might have hoped, a run-once script that generates all of the tables and figures from the article. Indeed, as supplied, the main script file (ADLDocumentation1.sas) produces two runtime errors at line 61 because the variables created within the SAS data file DLW at lines 42 and 43 are lost when this file is overwritten twice at lines 45 and 46. It seems that the code is best regarded as a collection of "building blocks" that can be run individually, possibly with minor modifications to use different subsets of the data. However, for completeness, I patched up the code so that it would run without error messages, and also to include both the original and adjusted analyses of the values in Figure 3D (see "The adjusted weight data", below), and ran it in SAS University Edition. I have made the resulting code ("Nick-ADLDocumentation1.sas") and output ("(Annotated) Results_Nick-ADLDocumentation1.pdf") files available online (see "My code and data", below).

The exact length of the study

An issue that stands out immediately when one looks at any of the data files containing daily records is that there seems to be a fencepost error. Participants spent 14 days on each of two diets, with no break in between; their weight at the start of day 1 was the baseline for the first phase (processed or unprocessed diet, assigned at random), and their weight at the start of day 15 was the baseline for the second phase, when they received the other diet. It would seem, therefore, that they should have been weighed 29 times—once at the very start of the study, and then 28 more times after eating a day's worth of meals each time—but there are only 28 daily weight records for each participant. That is, we apparently do not know the effect on their weight of the last (14th) day of the second diet, because the last measurement of their weight on that second diet was apparently the one made on the morning of the 14th day (their 28th in the study), before they proceeded to eat their food and undergo whatever other measurements were performed on that day. This seems to make little sense, from the standpoint of either study design or ethics. Why feed your participants the controlled diet on the last day if you are not going to collect weight data from them relating to that day?

In fact this problem seems to be exacerbated because, as the data files deltabc and deltabw show, the difference in weight retained for each participant on both diets was the difference between their weights at the start of the first and 14th day on that diet. That is, even for the first diet that each person followed, their final weight was the weight at the start of the 14th day in the study, not that at the start of the 15th day; and the effect of the meals that they consumed on the 14th day of the study is also essentially disregarded.
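To make the fencepost arithmetic concrete, here is a minimal Python sketch. It is not taken from the authors' SAS code; the function names are mine, and it assumes (as described above) one weigh-in each morning before that day's meals.

```python
def weigh_ins_needed(days_per_diet: int, n_diets: int = 2) -> int:
    """Morning weigh-ins needed to bracket every day of eating:
    one baseline, plus one on the morning after each day of meals."""
    return days_per_diet * n_diets + 1


def days_of_eating_captured(first_morning: int, last_morning: int) -> int:
    """Days of meals reflected in the difference between two morning
    weights, taken at the start of day `first_morning` and at the start
    of day `last_morning`."""
    return last_morning - first_morning


recorded_per_participant = 28  # daily weight records in the data files
print(weigh_ins_needed(14) - recorded_per_participant)  # weigh-ins missing

# Difference between the start of day 1 and the start of day 14 of a diet
# (what deltabw and deltabc appear to contain) versus a full 14 days.
print(days_of_eating_captured(1, 14))
print(days_of_eating_captured(1, 15))
```

On this reading, each retained weight change reflects 13 days of meals rather than 14.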

Which days did participants spend in the respiratory chamber?

Participants spent one day per week in a respiratory chamber to enable their energy expenditure to be studied in detail. The article states that "On the chamber days, subjects were presented with identical meals within each diet period, and those meals were not offered on non-chamber days" (p. 72), which makes sense from an experimental control point of view, in that all participants would have consumed the same food on that day. The article's Supplemental Information [PDF, 21MB] further states (on pp. 15, 16, 17, 37, 38, and 39) that the chamber day was day 5 of each weekly meal rotation, corresponding to days 5 and 12 of each participant's time on each diet.

However, the great majority of the records in the data file chamber appear to contradict this. I looked for precise matches between the recorded energy intake on the chamber days and the records for each participant in the dailyintake file, and found exactly one match for each participant and chamber day. Support for the idea that these matches are not coincidental is provided by the fact that the calendar dates of each record of the matched pairs (one in chamber and one in dailyintake) are identical. The matched records imply that of the 80 chamber days (20 participants x 2 diets x 2 chamber days per diet), only 7 took place on day 5 of the weekly meal rotation (whereas 2 were on day 1, 24 on day 3, 3 on day 4, 31 on day 6, and 13 on day 7). Furthermore, of the 40 pairs of chamber days within the same diet, 15 were on different meal rotation days within the pair (e.g., for participant ADL002 on the unprocessed diet, the chamber days were 3 and 8, corresponding to the third and first days of the meal rotation, respectively), meaning that the participant would have eaten different meals on their two chamber days for a given diet in 37.5% of cases. It is difficult to reconcile these records with the claims in the article and supplemental information.
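The mapping from a day on the diet to a day of the meal rotation, and the tallies quoted above, can be checked with a short Python sketch. The helper name `rotation_day` is mine, and the counts are simply those reported in the previous paragraph, not a re-analysis of the data files.

```python
from collections import Counter


def rotation_day(diet_day: int, cycle: int = 7) -> int:
    """Map a day on a diet (1-14) to its day in the 7-day meal rotation (1-7)."""
    return (diet_day - 1) % cycle + 1


# Chamber days per rotation day, as reported in the text above.
reported = Counter({1: 2, 3: 24, 4: 3, 5: 7, 6: 31, 7: 13})
total_chamber_days = sum(reported.values())
expected_chamber_days = 20 * 2 * 2  # participants x diets x chamber days per diet

# Days 5 and 12 of a diet both fall on rotation day 5, as the supplement describes.
print(rotation_day(5), rotation_day(12))

# ADL002 on the unprocessed diet: chamber days 3 and 8.
print(rotation_day(3), rotation_day(8))

# Share of within-diet pairs whose two chamber days had different rotation days.
mismatched_share = 15 / 40
print(total_chamber_days, expected_chamber_days, mismatched_share)
```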

[KH... The article and supplement do not claim that “participants did indeed all spend days 5 and 12 of each diet in the chamber”. Rather, the main manuscript describes that participants spent one day each week in the respiratory chambers but does not specify the days of the week. The Supplementary Materials provide information about the rotating 7-day menu of meals provided on each diet and the chamber days were listed as occurring on day 5 of each week. This was not intended to indicate that the chamber days only occurred on day 5 but rather that the meals provided during the chamber days were prespecified and did not vary between subjects on the same diet no matter what day the chamber days occurred. The clinical protocol (available on the OSF website) indicates in Appendix A that the proposed schedule (page 34) had chamber days planned for days 3 and 10 on each diet. However, the protocol also notes on pages 13-14 that “Every effort will be made to adhere to the proposed timelines, but some flexibility is required for scheduling of other studies, unanticipated equipment maintenance, etc. Scheduling variations will not be reported.” Thus, while chamber days varied to accommodate such scheduling challenges, the meals provided on the chamber days were constant within each diet. ...KH]

Counting the calories

The data file dailyintake contains information about the amount of calories and individual nutrients consumed by the participants on each day. The total number of calories consumed is reported to two decimal places, but the individual readings of calories for protein, fat, and carbohydrates that sum to that total are reported to six decimal places, and on visual inspection those digits do not appear to contain any regular patterns (which might correspond to, say, recurring decimals).

It is not clear how such numbers could have been generated, however, as the process for calculating the amount of calories consumed presumably ought to have been a fairly simple multiplicative one, based on estimates of the numbers of grams of protein, fat, and carbohydrates in the uneaten portions of each food that was offered, after deducting an estimate of the amount of water. (Edward Archer's comment on PubPeer mentions this issue, and suggests that using a bomb calorimeter might have been a better way to measure energy intake, although this doesn't seem to address the split into macronutrient types.) The authors report that the diets were designed and analyzed using ProNutra software, made by Viocare of Princeton, NJ. I wrote to Viocare to ask how this software calculates calories from macronutrients (for example, whether it uses the Atwater values of 4.0 kcal/g for protein and carbohydrates and 9.0 kcal/g for fat) and whether it typically generates long mantissas in its output. Its founder and president, Rick Weiss, sent me this reply:

ProNutra’s standard nutritional database is from USDA which we load into ProNutra with the resolution as USDA provides. Typically a research group using ProNutra would round off to the decimal place that they need. So I agree, seeing a value to the 6th decimal doesn’t make sense. The analysis of calories from macronutrients does use Atwater values.

[KH... More specifically, ProNutra uses specific Atwater factors which can deviate from the general values of 4.0 kcal/g for protein and carbohydrates and 9.0 kcal/g for fat. Therefore, the assumption immediately below is invalid. ...KH]

But if the calories per gram are always integers, the presence of six decimal places of precision in the macronutrient information of every meal would seem to imply that the authors calculated the amount of food that was (a) served and (b) remained uneaten to the nearest microgram, which would require rather a lot of effort.

[KH... The six decimal points for the macronutrient kcals in the data files are easily explained. The data for the total energy consumed and the percentage from each macronutrient were provided to 2 decimal places. For example, 15.68% of energy consumed as protein and a total energy intake of 2003.47 kcal. Therefore, the kcal provided from protein was calculated to six decimal places in the data file as follows: 2003.47*0.1568 = 314.144096 kcal from protein. ...KH]
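The calculation described in this response can be reproduced exactly with decimal arithmetic. This is only a sketch of the stated example (a total to two decimal places multiplied by a percentage to two decimal places); the variable names are mine, and nothing here is taken from the study's own code.

```python
from decimal import Decimal

total_kcal = Decimal("2003.47")       # total energy intake, 2 decimal places
protein_fraction = Decimal("0.1568")  # 15.68% of energy from protein

# Two decimals times four decimals gives a product with six decimal places.
protein_kcal = total_kcal * protein_fraction
decimal_places = -protein_kcal.as_tuple().exponent

print(protein_kcal, decimal_places)
```

Using `Decimal` rather than floats keeps the product exact, so the count of decimal places is not blurred by binary rounding.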

I also wonder what was done in the case of processed snacks, where one would expect the authors to have simply used the nutrition information provided by the manufacturers.

[KH... The assumption that we used manufacturer provided nutrition information is not correct. As indicated in the manuscript, nutrient information was obtained from the USDA standard reference databases or if an item was not found in that database, we pulled from the Food and Nutrition Database for Dietary Studies, (also through the USDA). ...KH]

For example, on four days of the processed diet, three participants (ADL006 on days 3 and 4, ADL007 on day 8, and ADL015 on day 9) are recorded in the data file intakebymeal as having consumed 403.14 kcal in snacks, with 42.007956, 202.218222, and 158.933010 kcal coming from protein, fat, and carbohydrates respectively (these amounts are precisely identical on all four days). The chances that three people left exactly the same amount of snack food unfinished on a total of four occasions would seem to be negligible, so this duplication presumably corresponds to these participants having completely finished the contents of the same combination of snack packages on each day. But the nutrition information for each of these packaged snacks reports the amount of macronutrients with a precision of 1 g, so the calories from each of these macronutrients ought also to be an integer (a multiple of 4 or 9), unless the authors perhaps contacted the manufacturers and obtained analyses down to the microgram level.
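As a rough check of the integer argument, the following Python sketch divides the recorded calories by the general Atwater factors. That choice of factors is an assumption on my part (the response above notes that ProNutra uses specific Atwater factors, which can differ), and the variable names are illustrative only.

```python
from decimal import Decimal

# General Atwater factors in kcal/g (an assumption; see the lead-in).
GENERAL_ATWATER = {
    "protein": Decimal(4),
    "fat": Decimal(9),
    "carbohydrate": Decimal(4),
}

# Snack calories recorded in intakebymeal for the four matching days.
recorded_kcal = {
    "protein": Decimal("42.007956"),
    "fat": Decimal("202.218222"),
    "carbohydrate": Decimal("158.933010"),
}

whole_gram = {}
for macro, kcal in recorded_kcal.items():
    factor = GENERAL_ATWATER[macro]
    implied_grams = kcal / factor
    # Label values given to 1 g would make kcal an exact multiple of the factor.
    whole_gram[macro] = (kcal % factor == 0)
    print(f"{macro}: {implied_grams:.4f} g implied, whole grams: {whole_gram[macro]}")
```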

A further problem here is that these records show that the three participants in question consumed more calories in the form of fat versus carbohydrates from their snacking on these four days, but substantially fewer calories from protein versus carbohydrates. The only processed snack in the image on p. 24 of the Supplemental Information that has more calories from fat than from carbohydrates is the 28 g package of Planters salted peanuts (see my file snacks.xls), but this also has more calories from protein than from carbohydrates. I have not been able to identify any combination of packaged snacks that would get even close to the proportions of calories from protein, fat, and carbohydrates that are reported for these three participants, especially given the presumed constraint of counting only entire packages.

[KH... The combination of foods that result in these proportions of calories from protein, fat, and carbohydrates was indicated above: 28g, 39 g and 113 g for peanuts, cheese & peanut butter crackers, and applesauce, respectively.

As an approximate calculation using general Atwater factors, we have:

- Peanuts 28 g providing 163.8 kcal, 6.63 g protein, 13.9 g fat, 6.02 g carbohydrates

- Cheese & Peanut butter crackers 39 g providing 191.88 kcal, 4.21 g protein, 9.55 g fat, 23.01 g carbohydrates

- Applesauce 113 g providing 47.46 kcal, 0.19 g protein, 0.11 g fat, 12.74 g carbohydrates

When summed, these snacks provide 403.1 kcal, 11.03 g protein (44.12 kcal using general Atwater factor), 23.56 g fat (212.04 kcal using general Atwater factor), 41.77 gm carbohydrates (167.08 kcal using general Atwater factor). Thus, most of the total calories come from fat, followed by carbs, and then protein. ...KH]
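The sums in this response can be reproduced with a few lines of Python. The per-package figures are copied from the list above; the general Atwater factors are the approximation that the response itself uses, and the dictionary keys are my own labels.

```python
from decimal import Decimal as D

# Per-package values as quoted in the response (kcal, then grams).
snacks = {
    "peanuts, 28 g": {"kcal": D("163.8"), "protein": D("6.63"), "fat": D("13.9"), "carbs": D("6.02")},
    "cheese & peanut butter crackers, 39 g": {"kcal": D("191.88"), "protein": D("4.21"), "fat": D("9.55"), "carbs": D("23.01")},
    "applesauce, 113 g": {"kcal": D("47.46"), "protein": D("0.19"), "fat": D("0.11"), "carbs": D("12.74")},
}

GENERAL_ATWATER = {"protein": D(4), "fat": D(9), "carbs": D(4)}  # kcal/g

# Column totals across the three packages.
totals = {
    key: sum(item[key] for item in snacks.values())
    for key in ("kcal", "protein", "fat", "carbs")
}

# Calories per macronutrient under the general factors, largest first.
kcal_by_macro = {macro: totals[macro] * factor for macro, factor in GENERAL_ATWATER.items()}
ranking = sorted(kcal_by_macro, key=kcal_by_macro.get, reverse=True)

print(totals)
print(kcal_by_macro)
print(ranking)
```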

The participants

Participants are identified in the data by sequentially numbered labels from ADL001 through ADL021. That represents a span of 21 unique values, but there are no records with the label ADL011. Whether this is due to an error in assigning a label or a participant dropping out is not clear; however, there is no mention in the article of anyone dropping out of the study.
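A gap of this kind is easy to detect programmatically. The sketch below assumes the labels follow the pattern ADL plus a three-digit number; the helper `missing_labels` is hypothetical, and the list of observed labels is built here from the description above rather than read from the data files.

```python
def missing_labels(observed, first=1, last=21, prefix="ADL"):
    """Return the labels in the expected sequence that never appear in `observed`."""
    expected = [f"{prefix}{n:03d}" for n in range(first, last + 1)]
    seen = set(observed)
    return [label for label in expected if label not in seen]


# Labels present in the data, per the text: ADL001 to ADL021 without ADL011.
observed = [f"ADL{n:03d}" for n in range(1, 22) if n != 11]

print(len(observed), missing_labels(observed))
```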

[KH... ADL011 declined to participate in the study after their successful screening visit when they were assigned their subject number. No participants dropped out or were withdrawn from the study after admission. ...KH]

Participant ADL006 (male) had a baseline BMI of 18.050 kg/m², which is below the minimum specified in the inclusion criteria on pp. e1–e2 of the article (18.5 kg/m²). That is, on the authors' own terms it seems that he ought to have been excluded from the study.

[KH... This participant met inclusion criteria at their screening visit, but their starting BMI was lower once admitted for the study. ...KH]

Participant ADL020 (female) had a baseline BMI of 26.853. During her 14 days on the unprocessed diet she consumed an average of just 836 kcal/day and lost a total of 4.3 kg (9.4 lbs) in weight, accounting on her own for nearly a quarter (23.7%) of the total weight loss of the sample on the unprocessed diet. On day 12 of the same diet she obtained 22% of her calories (128 kcal out of 578 kcal total) from carbohydrates, which was the lowest daily percentage of any participant on any day on either diet in the entire study, whereas on the next day, day 13, she obtained 62% of her energy intake (602 kcal out of 962 kcal total) from carbohydrates, which was the highest daily percentage of any participant on any day on either diet in the entire study. This combination of extraordinary weight loss, very low levels of energy intake, and highly variable eating patterns makes me wonder how much we can generalise from this participant to a broader understanding of the effects of different types of diet on the wider population. It seems to me that some kind of Hawthorne-type effect may have been present here.

[KH... The limitations of our study regarding generalizability were discussed in the manuscript. It is well-known in human nutrition research that individual subjects have large day-to-day diet variability and that there is large individual variability in weight loss. ...KH]

Errors in the data for individual participants

Participant ADL010 has a baseline (day 1, unprocessed diet) weight of 91.97 kg in the data file deltabw but 93.17 kg in the data file baseline. This affects, at least, the results shown in Table S1. If 91.97 kg is the correct weight then the Total mean for weight is correct but the Male mean (79.2 reported, 79.0 actual) and Male SE (6.6 reported, 6.5 actual) are not. If 93.17 kg is correct then the Male mean and SE are correct, but the Total mean (78.2 reported, 78.3 actual) isn't. I have not evaluated the effect of this discrepancy on the headline results of the study, but given that the total weight loss of all 20 participants on the unprocessed diet was 18.07 kg, a difference of 1.20 kg would seem to be potentially quite important.

ADL010's weight on day 2 (versus day 1) is recorded as 93.17 kg in deltabw, so one possibility is that for this participant only, the copying process that generated the baseline table somehow picked up the day 2 value rather than the day 1 value. Interestingly, according to that same file, this participant's weight fell back again to exactly 91.97 kg on day 3, which seems like quite a strong yo-yo effect.
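For scale, here is the size of the gap between the two recorded baseline weights, set against the total weight loss on the unprocessed diet. This is only a sketch using the three figures quoted above; it says nothing about which of the two values is correct.

```python
from decimal import Decimal

weight_in_deltabw = Decimal("91.97")   # kg, day 1 of unprocessed diet, file deltabw
weight_in_baseline = Decimal("93.17")  # kg, same participant, file baseline
total_loss_unprocessed = Decimal("18.07")  # kg, all 20 participants

discrepancy = weight_in_baseline - weight_in_deltabw
share_of_total_loss = discrepancy / total_loss_unprocessed

print(f"discrepancy: {discrepancy} kg")
print(f"as a share of total loss: {float(share_of_total_loss):.1%}")
```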

Other oddities in the data

The adjusted weight data

Update1: Body composition changes presented in Figure 3D are adjusted for 14 days because the body compositions were not measured exactly 14 days apart. In the previous version of SAS code and data, such adjustment was not provided. Here we have updated the SAS code at the section "data for figure 3D" and added a dataset "DeltaBCadj14";

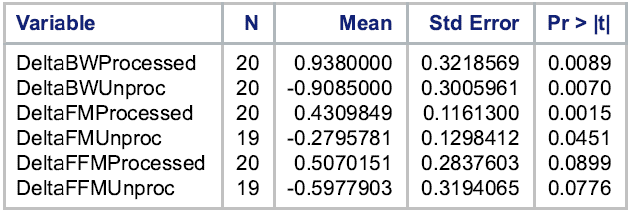

- First, it would be interesting to know what the adjustment process was. It seems to have been quite powerful, because some of the differences between the original and adjusted values are substantial. For example, for participant ADL014, the loss in weight on the unprocessed diet has been adjusted from 0.10 kg to 0.95 kg, and for ADL005 the equivalent loss has gone from 0.26 kg to 1.79 kg; participant ADL019's gain of 0.30 kg on the unprocessed diet has been adjusted to a loss of 0.24 kg, while participant ADL021's loss of 0.30 kg on the processed diet has been adjusted to a gain of 0.16 kg. These changes appear to affect principally the fat-free mass rather than the fat mass, which in numerous cases (8 out of 20 on the processed diet, 2 out of 19 on the unprocessed diet) is identical to two decimal places after adjustment. For example, participant ADL010's original weight gain of 3.60 kg on the processed diet becomes 2.69 kg in the adjusted file, but his fat mass did not change at all.

- Second, if the authors believe that these adjusted figures provide a better estimate of the effects of the diets, one might wonder why they have not submitted a correction, updating the claims about weight loss that featured in the abstract of their article, rather than allowing this important new information to languish in an OSF repository. Otherwise it is not clear what the point of performing these "adjusted" analyses was.
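To show the size and direction of the adjustments mentioned in the first point, the sketch below tabulates the five examples quoted there. Changes are written as signed values in kg, with losses negative; that sign convention and the variable names are mine, and the adjustment method itself remains unknown.

```python
from decimal import Decimal as D

# (participant, diet): (original change, adjusted change), in kg; loss < 0.
examples = {
    ("ADL014", "unprocessed"): (D("-0.10"), D("-0.95")),
    ("ADL005", "unprocessed"): (D("-0.26"), D("-1.79")),
    ("ADL019", "unprocessed"): (D("0.30"), D("-0.24")),
    ("ADL021", "processed"): (D("-0.30"), D("0.16")),
    ("ADL010", "processed"): (D("3.60"), D("2.69")),
}

shifts = {}
sign_flips = []
for key, (original, adjusted) in examples.items():
    shifts[key] = adjusted - original
    # A sign flip means a recorded gain became a loss, or vice versa.
    if (original > 0) != (adjusted > 0):
        sign_flips.append(key[0])
    print(key, "shift:", shifts[key], "kg")

print("direction changed for:", sign_flips)
```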

Conclusion

My code and data

Acknowledgements

Note on copyright

Footnotes

(*) I have put these terms in quote marks to emphasise that they have a specific technical meaning. I don't know if that is a good idea, though; perhaps it looks like I am putting Dr Evil-style air quotes around them. That isn't my intention.